Bayesian Inference 101: One-Shot Thinking & How to Catch Your Own Confirmation Bias

Overcoming Cognitive Bias with Bayes’s Rule — from Superyachts to Everyday Decisions

Thomas Bayes: Quantifying Our Beliefs

More than 250 years ago, Thomas Bayes devised a simple yet powerful rule for updating beliefs when new information arrives. Bayes never saw his famous theorem in print; his friend Richard Price edited the essay and presented it posthumously to the Royal Society in 1763. His insight, combining your “prior” hunch with the strength of fresh evidence, still underpins everything from Microsoft’s first Bayesian spam filter to Netflix’s recommendation algorithms and modern medical-diagnostic tools. In 2018, the University of Edinburgh opened the £45 million “Bayes Centre” to drive cutting-edge data-science research, proof that an 18th-century idea still powers 21st-century innovation.

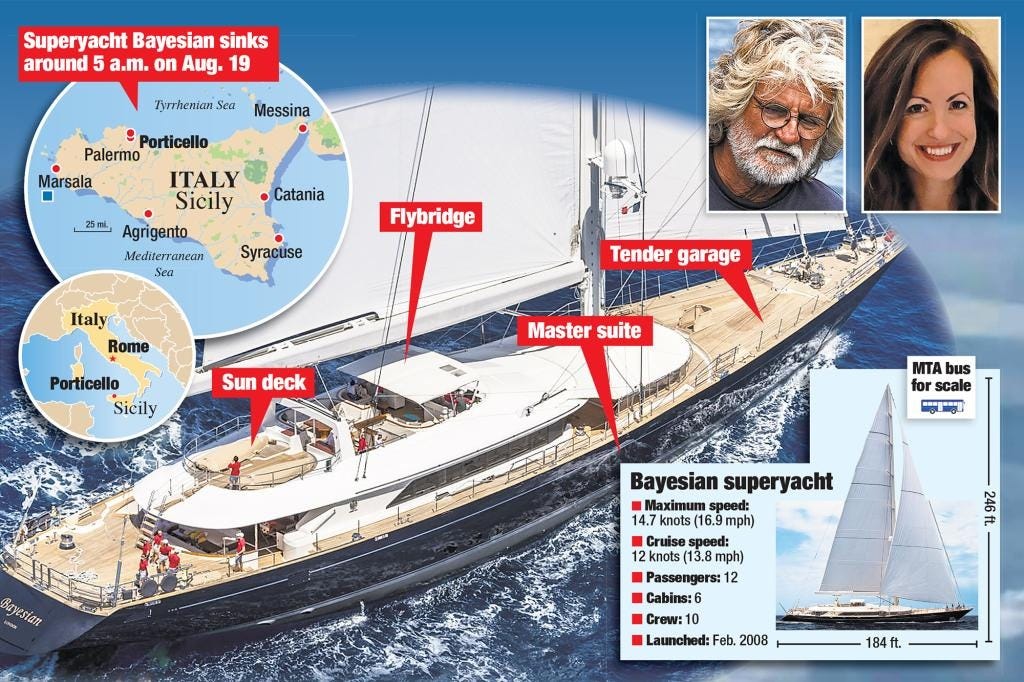

In the past few days, the superyacht Bayesian has resurfaced, a year after its tragic sinking. British billionaire Mike Lynch, 59, his daughter Hannah, 18, and five others died after the 56-metre (184ft) Bayesian sank off Porticello, Sicily, on 19 August 2024. The yacht was owned by Mike Lynch, founder of Autonomy Corporation, and was named Bayesian as a direct homage to Bayesian inference, the statistical framework central to Autonomy’s machine-learning and data-analysis tools. The name reflected Lynch’s public pride in probabilistic reasoning and his company’s pioneering use of Bayesian methods in enterprise software.

The Problem: One-Shot Thinking

All too often, we settle on the first story or explanation that pops into our heads and never look back. Imagine you blow a job interview and immediately conclude, “I’m too inauthentic to compete.” Or you find a parking ticket on your windscreen and think, “That warden must have had it in for me.”

This snap-judgement style — what I’ll call one-shot thinking — ignores the fact that a single outcome often has multiple plausible explanations. By jumping to our favourite narrative, we invite errors, self-criticism and blind spots into our reasoning.

Cognitive Bias Primer

Before we learn how to weigh alternatives, let’s meet three mental shortcuts that steer us off course:

Anchoring

We cling to the first figure or idea we hear. If someone mentions a salary of £30 000, every later offer feels high or low compared with that anchor, even if the true range is £35–45 000.

Availability

We judge how common an event is by how easily examples come to mind. After watching a news story about a plane crash, you might overestimate the danger of flying, despite its excellent safety record.

Confirmation Bias

We notice only the facts that back our favourite theory and ignore the rest. If you believe your colleague dislikes you, you’ll remember the one curt reply and gloss over the five friendly chats.

Pause for a moment: which of these biases sounds most familiar in your own life? By spotting them early, you can force yourself to ask, “What else might explain this?”

From One-Shot Judgements to Echo Chambers

Social media algorithms

Modern social-media algorithms actively exploit these same biases by curating our feeds to match our existing preferences, creating so-called “filter bubbles” that reinforce our initial assumptions. This personalised curation narrows the range of perspectives we see, intensifying confirmation bias and deepening echo chambers (Pariser, 2011; Zollo & Quattrociocchi, 2017). Studies indicate that algorithmic ranking reduces exposure to counter-attitudinal content, making it harder to encounter, and therefore consider, alternative explanations (Bakshy, Messing, & Adamic, 2015; Nikolov et al., 2015); Bakshy and colleagues also found that individuals’ own choices played an even stronger role than the ranking in limiting that exposure. Even when we follow a diverse set of sources, ranking engines still prioritise familiar narratives, raising the walls of our mental echo chambers. As a result, our feeds become a steady stream of agreeable viewpoints, much like a pleasurable fix, making it ever harder to step back, question our first impressions, and update our beliefs objectively (Pariser, 2011).

Enter… Bayesian inference!

Bayes’ Theorem: Posterior, Evidence & Prior

Before we run through a concrete example, let’s label each piece of Bayes’ rule in plain English. Note that the ‘P’s in the formula are probabilities (or likelihoods) ranging from 0 to 1. We can also read these P’s as degrees of confidence in our hypotheses or beliefs (H). For example, P(H) = 1.0 means we are certain that hypothesis H is true: P(H) = 1 if H is ‘2 + 2 = 4’.

The Prior (P(H))

Your initial belief about how likely hypothesis H is, before seeing any new evidence.

The Evidence / Likelihood (P(E | H))

How likely you would be to observe the evidence E if hypothesis H were true. This is a ‘conditional probability’: how probable the data are, assuming the hypothesis is true.

The Normaliser (P(E))

The overall chance of seeing the evidence E across all the hypotheses you are considering, found by adding up P(E | H) × P(H) for each one. It is essentially a rescaling factor so that your updated beliefs add up to 100 %.

The Posterior (P(H | E))

Your updated belief in hypothesis H after taking the evidence E into account.

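Putting the pieces together, Bayes’ theorem reads:

$$P(H \mid E) = \frac{P(E \mid H)\, P(H)}{P(E)}$$

In many practical discussions, especially when comparing multiple hypotheses, the denominator P(E) is treated as a common scaling factor and dropped from the notation. You’ll commonly see Bayes’ theorem written as:

$$P(H \mid E) \propto P(E \mid H) \times P(H)$$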

This is Bayes’ theorem, the heart of Bayesian reasoning: it turns your prior beliefs and new evidence into updated, coherent levels of confidence. It captures the core of this type of fluid-intelligence-based reasoning.
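If you’d like to see the arithmetic as code, here is a minimal Python sketch of the rule for several competing hypotheses: multiply each prior by its likelihood, then divide by the sum of those products (the normaliser P(E)). The function name `bayes_update` and the dictionary layout are our own illustrative choices, not a standard library API, and the numbers in the usage line are made up.

```python
def bayes_update(priors, likelihoods):
    """Return the posterior P(H | E) for each hypothesis H.

    priors:      dict mapping hypothesis -> P(H)
    likelihoods: dict mapping hypothesis -> P(E | H)
    """
    # Unnormalised score for each hypothesis: P(E | H) * P(H)
    scores = {h: priors[h] * likelihoods[h] for h in priors}
    # Normaliser P(E): total probability of the evidence across all hypotheses
    evidence = sum(scores.values())
    if evidence == 0:
        raise ValueError("The evidence is impossible under every hypothesis.")
    # Posterior: rescale the scores so the updated beliefs add up to 1
    return {h: s / evidence for h, s in scores.items()}


# Hypothetical usage: two equally plausible hypotheses, evidence favouring H1
posterior = bayes_update({"H1": 0.5, "H2": 0.5}, {"H1": 0.8, "H2": 0.2})
print({h: round(p, 2) for h, p in posterior.items()})  # -> {'H1': 0.8, 'H2': 0.2}
```

Because the priors start equal here, the posterior simply mirrors the likelihoods; with unequal priors, both ingredients pull on the result.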

Iceland Weather Example

Imagine you’re on holiday in Reykjavík and you check the forecast the night before:

Prior P(H)

The weather app forecasts a 70 % chance of rain.

Your prior belief P(rain) = 0.7 (this is H1)

Your prior belief P(no rain) = 0.3 (this is H2)

We apply Bayes’ rule to both of these hypotheses (beliefs), not just one. This lets us use our fluid intelligence to think analytically about different possible explanations, rather than simply trusting our first instinct about what the morning will bring on our holiday. Generating alternatives is super important if we want to reason well.

New Evidence and Likelihoods P(E | H)

When you wake up, you look out of the window and see a clear blue sky with no clouds.

How likely is it to see a bright sunny morning if it really will rain later (H1)? Probably low, maybe 20 %. (Think carefully here about how diagnostic this evidence is.)

How likely is it to see a sunny morning if it won’t rain (H2)? Quite high, say 90 %. (Again, think carefully about how diagnostic the evidence is.)

These are your ‘Evidence’ estimates:

P (Evidence | rain) ≈ 0.2

P (Evidence | no rain) ≈ 0.9

Unscaled Scores

Multiply each prior by its likelihood:

rain: 0.7 × 0.2 = 0.14

no rain: 0.3 × 0.9 = 0.27

Normaliser

Add these scores together to get the normaliser, which rescales them into valid probabilities:

P(Evidence) = 0.14 + 0.27 = 0.41

Posterior / Updated Belief P(H | E)

Divide each unscaled score by the normaliser (you could use your phone calculator for this):

P(rain | sunny morning) = 0.14 ÷ 0.41 ≈ 0.34 (34 %)

P(no rain | sunny morning) = 0.27 ÷ 0.41 ≈ 0.66 (66 %)
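Here is the same Iceland calculation as a short, self-contained Python sketch, using only the numbers from the steps above; the variable names are illustrative.

```python
# Iceland weather example: priors from the forecast
priors = {"rain": 0.7, "no rain": 0.3}
# Likelihood of a clear morning under each hypothesis, P(sunny morning | H)
likelihoods = {"rain": 0.2, "no rain": 0.9}

# Step 1: unscaled scores, prior x likelihood
scores = {h: priors[h] * likelihoods[h] for h in priors}
# Step 2: normaliser P(sunny morning), the sum of the scores
evidence = sum(scores.values())
# Step 3: posterior P(H | sunny morning), each score divided by the normaliser
posterior = {h: s / evidence for h, s in scores.items()}

print(f"P(evidence) = {evidence:.2f}")
for h, p in posterior.items():
    print(f"P({h} | sunny morning) = {p:.2f}")
```

Running it prints P(evidence) = 0.41, then 0.34 for rain and 0.66 for no rain, matching the hand calculation.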

Why compare?

Because we’ve now computed a posterior probability for each of our two hypotheses, we can directly see which one the evidence supports more strongly. In this case, a sunny morning makes “no rain” nearly twice as likely as “rain,” so we can be most confident in the no-rain hypothesis.

What has been inferred?

Even though the forecast said 70 % rain, seeing clear skies this morning makes “no rain” now nearly twice as likely as “rain.” The normaliser (0.41) simply rescales your new scores so they add up to 100 %, giving you coherent updated odds.
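You can double-check the ‘nearly twice as likely’ figure with the odds form of Bayes’ theorem, a standard rearrangement in which the normaliser cancels out: posterior odds = prior odds × likelihood ratio. With the numbers above:

$$\frac{P(\text{no rain} \mid E)}{P(\text{rain} \mid E)} = \frac{P(\text{no rain})}{P(\text{rain})} \times \frac{P(E \mid \text{no rain})}{P(E \mid \text{rain})} = \frac{0.3}{0.7} \times \frac{0.9}{0.2} = \frac{0.27}{0.14} \approx 1.93$$

In other words, the clear sky multiplies the odds of ‘no rain’ by 4.5 (0.9 ÷ 0.2), turning prior odds of about 0.43 into posterior odds of about 1.93.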

So, running through this process, you have a solid rational basis for going out on a hike rather than going to a museum that morning! Trust in the process!

Your Bayesian Mindware

In this section (free template linked below), we’ll walk through a simple “Prior × Likelihood → Normalise” exercise to see Bayesian inference in action — and catch our own confirmation bias in the act.

Combining this kind of “offline” analytical thinking with working-memory n-back training is a cornerstone of IQ Mindware’s evidence-based brain-training approach for increasing intelligence.

What it is: A purely in-your-head “tree walk” script where you fill in an initial belief and evidence, conjure 2–3 alternatives, assign rough priors & likelihoods mentally, and rank posteriors — all while calling out any biases.

Why practice it: By holding multiple stories in working memory and running through the ×-and-normalise steps yourself, you train fluid-intelligence skills and strengthen your ability to think clearly without any tech crutch.

Here’s a self-guided mental script you can work through entirely “in your head” to turn any piece of evidence and an intuitive belief into a small Bayesian reasoning tree. Wherever you see [blank], fill in your own content. At each step you’ll be prompted to spot common biases and counter them.

Bayesian Inference Mindware

Notice the Evidence

Quietly focus on the fact or event you want to explain.

Write (or say silently): “My evidence is: [E].”

Example: “I failed that job interview.”

Bias check: Do you feel a rush of self-criticism or drama? That could be negativity bias; gently remind yourself you’re only collecting data, not judging yourself.

State Your Initial Belief (Hypothesis 1)

Write: “My first hypothesis is: H1 = [my gut belief].”

Example: “At my age, I’m unemployable.”

Bias check: Is this belief influenced by anchoring (e.g. age) or availability (recent stories you’ve heard)? Note it.

Generate Alternatives

Without judging them, come up with 2 or 3 other plausible explanations:

H2 = [alternative]

(Optional) H3 = [alternative 3]

Example alternative:

H2 = “It’s a competitive job market and there was a weakness in my skill-set.”

Visualise H1 and H2 as a branching tree of options in your mind’s eye.

Bias check: Watch for confirmation bias — don’t default to alternatives that are variations of H1. Force yourself to think of genuinely different explanations.

Assign Rough Priors in Your Head

Ask: “Before I knew about the interview result, how plausible was each hypothesis?”

Give each a quick mental weight adding to 1.0 (e.g. 0.5 + 0.3 + 0.2).

P(H1) = [ ]

P(H2) = [ ]

Bias check: Are you overweighting H1 because it feels “right”? Try to adjust if you catch yourself.
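If your quick mental weights don’t quite add to 1.0, don’t worry: you can rescale them, which is exactly the job the normaliser does later. A minimal Python sketch; `normalise` is a hypothetical helper and the weights are invented for illustration.

```python
def normalise(weights):
    """Rescale non-negative weights so they sum to 1, keeping their proportions."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("At least one weight must be positive.")
    return {k: w / total for k, w in weights.items()}


# Hypothetical gut-feel weights that overshoot 1.0 (0.5 + 0.3 + 0.3 = 1.1)
rough_priors = {"H1": 0.5, "H2": 0.3, "H3": 0.3}
priors = normalise(rough_priors)
print({h: round(p, 2) for h, p in priors.items()})  # -> {'H1': 0.45, 'H2': 0.27, 'H3': 0.27}
```

Rescaling keeps your relative judgements intact (H1 is still a little under twice as plausible as H2); it only fixes the total.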

Estimate Likelihoods of the Evidence

For each Hᵢ, ask: “If Hᵢ were true, how likely would I have failed that interview?”

P(E | H1) = [ ]

P(E | H2) = [ ]

… (if there are other Hs)

Use simple labels if you like—High (≈0.8–1.0), Medium (≈0.4–0.7), Low (≈0.0–0.3).

Bias check: Beware overconfidence — if you’re too certain, dial it back.
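If you prefer words to numbers, the labels can be converted by taking the midpoint of each range above. This Python sketch assumes those midpoints (0.9, 0.55, 0.15); the `LABELS` table and `likelihood_from_label` helper are hypothetical, and other reasonable choices exist.

```python
# Hypothetical mapping: the midpoint of each rough range from the text
# High ≈ 0.8–1.0, Medium ≈ 0.4–0.7, Low ≈ 0.0–0.3
LABELS = {"high": 0.9, "medium": 0.55, "low": 0.15}


def likelihood_from_label(label):
    """Convert a verbal label such as 'High' into an approximate P(E | H)."""
    return LABELS[label.strip().lower()]


# Hypothetical judgements for the failed-interview example
likelihoods = {
    "H1": likelihood_from_label("High"),
    "H2": likelihood_from_label("High"),
    "H3": likelihood_from_label("Medium"),
}
print(likelihoods)  # -> {'H1': 0.9, 'H2': 0.9, 'H3': 0.55}
```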

Compute Unnormalised Scores

In your head multiply priors × likelihoods for each hypothesis:

score₁ = P(H1)×P(E | H1)

score₂ = P(H2)×P(E | H2)

…

Tip: You can approximate by thinking “0.5×High” as “around 0.4,” etc.
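The multiply step is easy to check on paper or in code. In this Python sketch the priors and likelihoods for three interview hypotheses are invented for illustration; note that H2’s score reproduces the tip above, since 0.5 × 0.8 = 0.4.

```python
# Hypothetical mental estimates for the failed-interview example
priors = {"H1": 0.3, "H2": 0.5, "H3": 0.2}       # P(H), summing to 1.0
likelihoods = {"H1": 0.9, "H2": 0.8, "H3": 0.5}  # P(E | H): chance of failing under each story

# Unnormalised score for each hypothesis: prior x likelihood
scores = {h: priors[h] * likelihoods[h] for h in priors}

for h, s in scores.items():
    print(f"score for {h}: {s:.2f}")
```

With these numbers the scores come out at 0.27, 0.40 and 0.10.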

Normalise & Compare

Sum = score₁ + score₂

Posterior for each = scoreᵢ ÷ Sum

In your mind, rank them: “Now H₂ is about 50 %, H₁ is about 30 %…”

Bias check: Notice if you still cling to H₁ — recognise belief perseverance, then let the numbers speak.
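Using the same invented numbers as in the scoring step, this Python sketch normalises the scores and ranks the hypotheses, mirroring the ‘Now H₂ is about 50 %’ step. The figures are illustrative only, not a verdict on anyone’s interview.

```python
# Same hypothetical estimates as in the scoring step
priors = {"H1": 0.3, "H2": 0.5, "H3": 0.2}
likelihoods = {"H1": 0.9, "H2": 0.8, "H3": 0.5}

scores = {h: priors[h] * likelihoods[h] for h in priors}
total = sum(scores.values())  # the normaliser, 0.77 with these numbers
posteriors = {h: s / total for h, s in scores.items()}

# Rank hypotheses from most to least supported by the evidence
ranking = sorted(posteriors.items(), key=lambda item: item[1], reverse=True)
for h, p in ranking:
    print(f"{h}: {p:.0%}")
```

This prints H2 at 52 %, H1 at 35 % and H3 at 13 %. H1 actually predicts the failure slightly better (0.9 versus 0.8), but it started with a lower prior, so it doesn’t win: exactly the kind of result belief perseverance tempts us to ignore.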

Draw Your Conclusion

Silently state: “Given how plausible each story was and how well it predicts what happened, my best explanation is H2 (…or whichever).”

If the top hypothesis still feels “wrong,” ask yourself what further evidence could settle the gap.

Plan a Reality-Check

Identify one action to gather more data:

E.g., “Next time, I’ll ask the recruiter for feedback on whether I am lacking any key skills.”

This turns reasoning into experiment, further reducing bias.

Bayes Mindware Exercises

For each scenario, assign prior probabilities to your hypotheses (based on background knowledge) and estimate how likely new evidence would occur under each hypothesis (the likelihood). Then use Bayes’ theorem as described above.

The more you practise, the easier and more automatic this mindware becomes.

1. Backward-Looking Explanations

Retrospective explanations often fall prey to hindsight bias (the sense that we ‘knew it all along’) and to one-shot thinking, where we latch onto the first story that comes to mind rather than weighing multiple possibilities.

Example 1: A Friend Didn’t Return Your Call

Hypothesis A: They were busy at work and simply forgot.

Hypothesis B: They’re upset with you after your last conversation.

Hypothesis C: Their phone battery died, so they didn’t see the call.
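To get you started, here is one possible worked version of this first exercise as a Python sketch. Every prior and likelihood below is an invented, illustrative guess; your own numbers should come from what you know about your friend.

```python
# Evidence E: your friend hasn't returned your call today.
# All numbers are hypothetical illustrations.
priors = {
    "A: busy and forgot": 0.6,     # common in everyday life
    "B: upset with you": 0.1,      # a falling-out is comparatively rare
    "C: phone battery died": 0.3,
}
likelihoods = {  # P(no call back today | hypothesis)
    "A: busy and forgot": 0.5,
    "B: upset with you": 0.8,
    "C: phone battery died": 0.7,
}

# Prior x likelihood, then divide by the normaliser
scores = {h: priors[h] * likelihoods[h] for h in priors}
total = sum(scores.values())
for h, s in scores.items():
    print(f"{h}: {s / total:.0%}")
```

Notice that B, the story an anxious mind jumps to, fits the silence well (0.8) yet ends up at only about 14 %, because its prior was low.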

Example 2: Your Keys Have Gone Missing

Hypothesis A: You lent them to a relative and forgot.

Hypothesis B: They slipped out of your pocket at the café yesterday.

Hypothesis C: You accidentally left them at the office desk.

2. Forward-Looking Beliefs

Forecasts about the future often reflect overconfidence in our internal models; Bayesian updating keeps us honest by forcing explicit priors and likelihoods.

Example 1: “I’ll Get That Promotion”

Model A: Your performance review was stellar, boosting your odds.

Model B: The company seldom promotes more than one person per year.

Model C: A recent reorganisation could delay all promotions.

Example 2: “My New Plant Will Survive”

Model A: It’s a hardy species with a 90 % survival rate under similar care.

Model B: You’ve been inconsistent with watering, reducing chances to about 50 %.

Model C: Recent pests in your area make survival only 30 %.
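Example 2 already gives survival rates, so it is a nice place to see Bayes’ rule at work on models rather than explanations. This Python sketch treats the three models as competing, mutually exclusive descriptions of your situation (a simplification) and uses invented prior weights of 0.4, 0.4 and 0.2; the survival rates are the ones stated above. The first step averages the models to predict survival; the second updates your belief in each model if the plant does survive.

```python
# Hypothetical prior weights on which model best describes your situation
model_priors = {"A: hardy species": 0.4, "B: inconsistent watering": 0.4, "C: local pests": 0.2}
# Survival probability under each model, as stated in the example
p_survive = {"A: hardy species": 0.9, "B: inconsistent watering": 0.5, "C: local pests": 0.3}

# Predicted chance of survival, averaged over the models (this is the normaliser P(E))
p_survival = sum(model_priors[m] * p_survive[m] for m in model_priors)
print(f"Predicted survival: {p_survival:.0%}")

# If the plant does survive, update belief in each model with Bayes' rule
posterior = {m: model_priors[m] * p_survive[m] / p_survival for m in model_priors}
for m, p in posterior.items():
    print(f"P({m} | survived) = {p:.2f}")
```

With these guesses the predicted survival is 62 %. If the plant thrives, the hardy-species model gains ground (from 0.40 to about 0.58), while the pests model shrinks to about 0.10.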

Summary

Use this mental template any time you catch yourself making a snap judgment. By forcing yourself to hold multiple stories in working memory, to spot anchoring or confirmation bias, and to do a quick “∝ prior×likelihood” check, you train your mind toward clearer, more objective conclusions—no AI needed.

Ready to go deeper?

If you found this free walkthrough useful, imagine what you could achieve with full access to our Pro toolkit — complete AI-powered prompts, multi-hypothesis templates, and downloadable cheat-sheets designed to supercharge your Bayesian reasoning.

👉 Upgrade to a paid subscription and get instant access to the “Bayesian Reasoning with AI” deep-dive and all future premium content.

References

Bakshy, E., Messing, S., & Adamic, L. A. (2015). Exposure to ideologically diverse news and opinion on Facebook. Science, 348(6239), 1130–1132.

Brugnoli, E., Cinelli, M., Quattrociocchi, W., & Scala, A. (2019). Recursive patterns in online echo chambers. EPJ Data Science, 8(7).

Nikolov, D., Oliveira, D. F. M., Flammini, A., & Menczer, F. (2015). Measuring online social bubbles. EPJ Data Science, 4(1), 1–16.

Pariser, E. (2011). The filter bubble: What the Internet is hiding from you. Penguin Press.

Zollo, F., & Quattrociocchi, W. (2017). Echo chambers and viral misinformation: A study on Facebook. PLOS ONE, 12(11), e0181531.