Cognitive Sovereignty: Why Your Thinking Needs a Dual Rails Strategy for AI Use

Learn how to use AI extensively without losing the ability to think and reason independently, before those capabilities become impossible to recover.

The Moment It Clicks

You’re staring at a strategy document you ‘wrote’ with AI assistance. The prose is clean, the logic flows, the recommendations seem solid. Your boss asks: “Walk me through your reasoning.”

You freeze. Not because the document is wrong - it isn’t. You freeze because you’re not entirely sure how you got here. The AI suggested the framework. You accepted it because it looked reasonable. You added some details. It filled gaps. You shipped it.

The document exists. But the reasoning doesn’t fully live in your head anymore. It lives in the collaboration - in a hybrid space you can’t fully reconstruct alone.

You now feel unsettled... a little less capable than you thought you were.

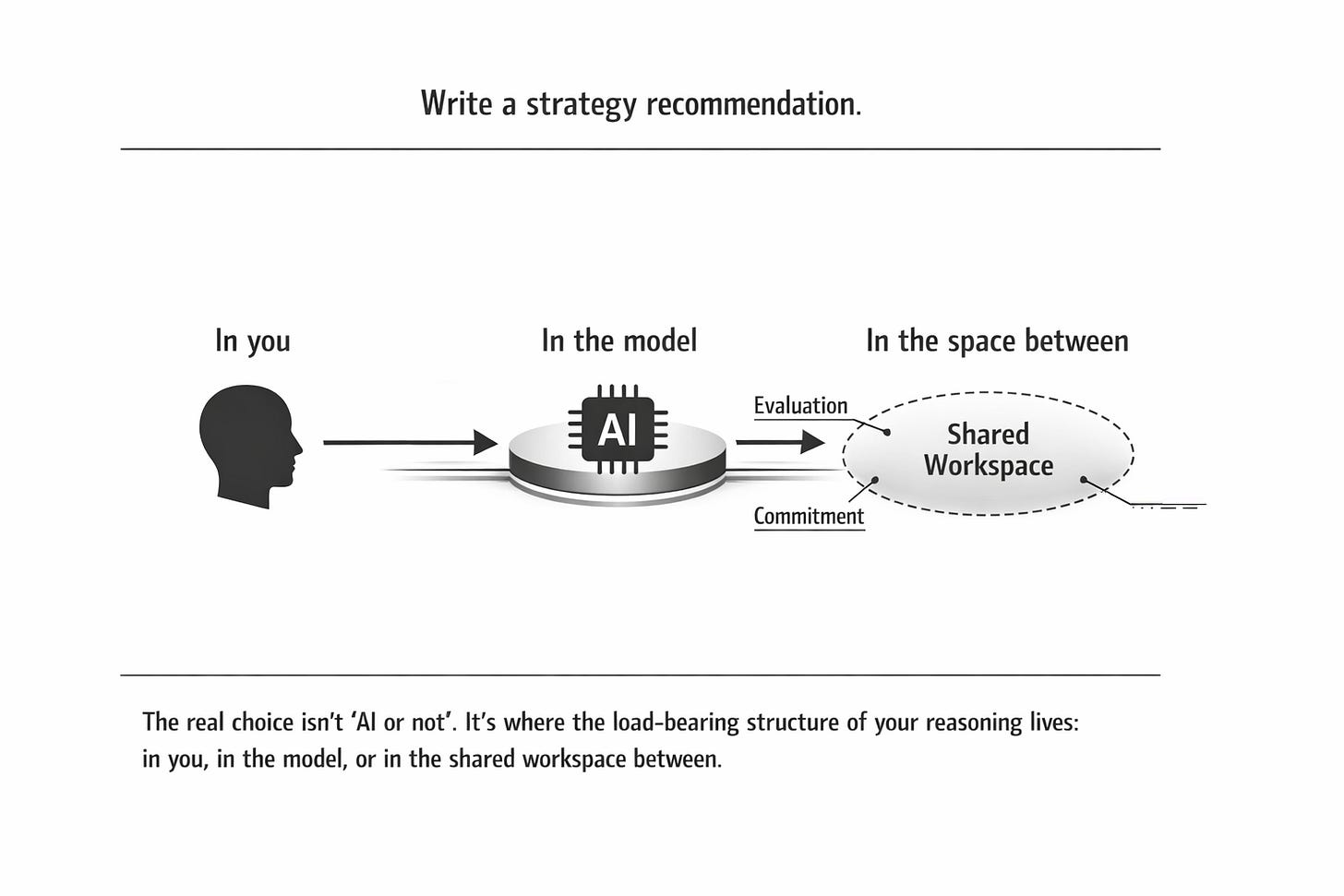

What’s Actually Happening: The Substitution Gradient

Here’s the geometry: every time you use AI, you’re making a choice about where cognition happens - in your brain, in the model, or in the space between.

Many people think they’re choosing “augmentation”—getting smarter with tools, like using a calculator. But there’s a second gradient running underneath that one, mostly invisible:

Augmentation (you + tool > you alone, and you alone stays strong)

→ Hybrid cognition (you + tool > you alone, but you alone is weakening)

→ Substitution (you + tool works fine, but you alone can’t function at baseline anymore)
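To make the gradient concrete, here is a minimal Python sketch. It looks only at the “you alone” column, because that is the part that moves invisibly. It assumes you could score your unaided work on a hypothetical 0-100 scale, both before heavy AI use and today, and compare it against a minimum score the task requires. The names `SoloCheck` and `gradient_stage`, the floor, and the 5-point tolerance are illustrative assumptions, not a validated measure.

```python
from dataclasses import dataclass


@dataclass
class SoloCheck:
    solo_baseline: float  # unaided score before heavy AI use (hypothetical 0-100 scale)
    solo_now: float       # unaided score today, same kind of task
    floor: float          # minimum unaided score the task needs to be done acceptably


def gradient_stage(check: SoloCheck, tolerance: float = 5.0) -> str:
    """Place a task on the substitution gradient using illustrative thresholds."""
    if check.solo_now < check.floor:
        # You + tool may still work fine, but you alone no longer clears the bar.
        return "substitution"
    if check.solo_baseline - check.solo_now > tolerance:
        # Still functional alone, but measurably weaker than before.
        return "hybrid"
    # Unaided ability has held steady (within the tolerance).
    return "augmentation"


print(gradient_stage(SoloCheck(solo_baseline=80, solo_now=78, floor=60)))  # augmentation
print(gradient_stage(SoloCheck(solo_baseline=80, solo_now=65, floor=60)))  # hybrid
print(gradient_stage(SoloCheck(solo_baseline=80, solo_now=50, floor=60)))  # substitution
```

The floor check runs first on purpose: once unaided performance falls below what the task requires, the size of the drop matters less than the fact that you can no longer do it alone.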

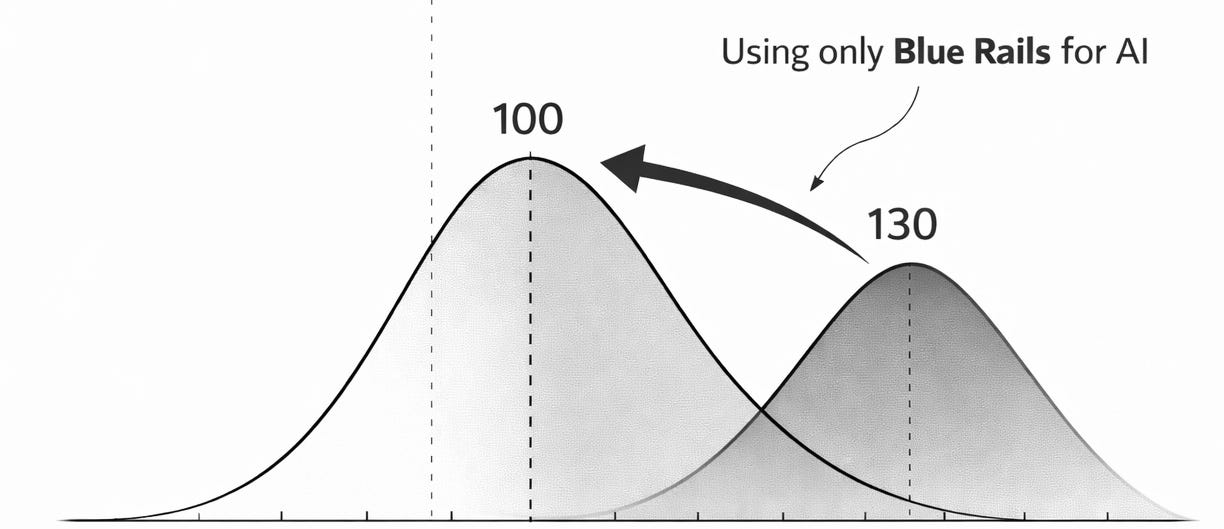

The jump from augmentation to substitution isn’t dramatic. It happens through micro-skill atrophy, as I’ve discussed before: the small capabilities you stop practicing because AI handles them faster.

First it’s formatting. Then it’s drafting. Then it’s “just give me three options to react to.” Then it’s “outline the argument structure.” Then one day you’re in a high-stakes meeting without your laptop and you realise: I’ve lost the habit of building reasoning from scratch.

The world sees: “Faster, more productive, collaborative thinking.”

You feel: “I’m not sure I could do this well without it anymore.”

What’s expected: “Use AI or fall behind”—economic pressure makes hybridisation the default.

That’s the trap. Not that AI is bad. That the transition is one-way and nobody tells you when you’ve crossed the threshold. Next thing you know, your IQ is degrading.

The Map: Two Rails, Two Futures

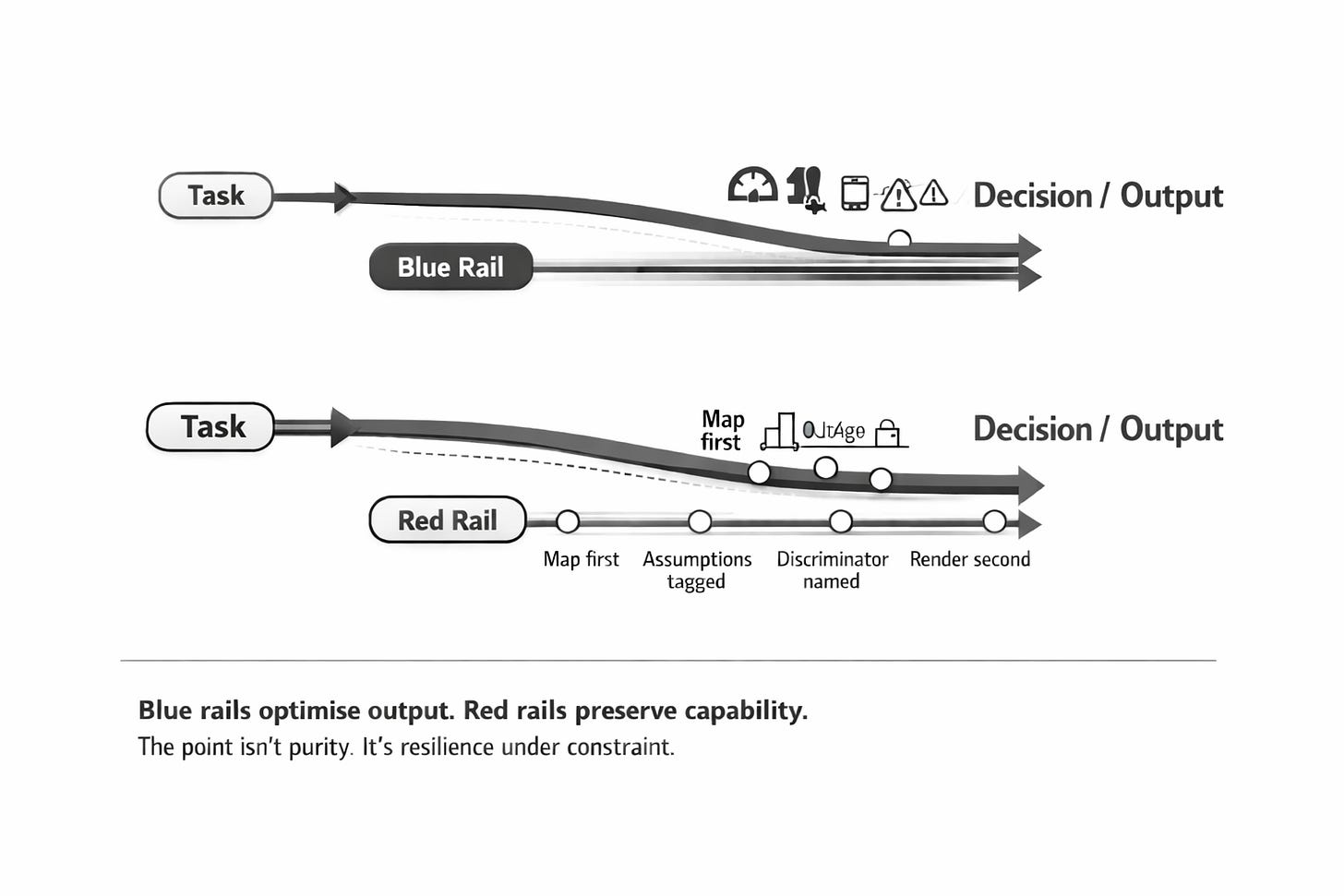

The choice isn’t “use AI or don’t.” It’s subtler and more consequential. In principle, we can have both hyper-productivity and augmented intelligence! Let’s face it: we’re not giving up on productivity gains.

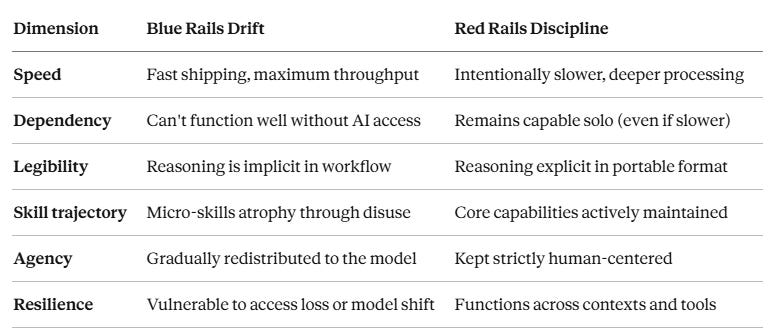

Blue Rails: AI as co-pilot. It helps you think, generates structure, fills gaps. Fast, smooth, efficient. Over time, your cognition becomes distributed: partly biological, partly algorithmic. This isn’t evil. It’s optimisation. But it’s also a cognitive architecture decision with compounding effects. We might argue it’s related to the idea of ‘transhumanism’.

Red Rails: AI as critic and tool. You build the reasoning structure yourself. AI retrieves evidence, stress-tests logic, proposes alternatives—but never substitutes for your judgment. Slower on purpose. Friction by design. The goal isn’t efficiency—it’s preserving the ability to think well alone.

Think of Blue Rails as the main highway—faster, more convenient, where everyone’s going. Red Rails is the parallel service road you maintain specifically so you can still navigate if the highway closes.

The Stakes (What You Actually Risk)

This isn’t about being “purist.” It’s about where you want the load-bearing structure of your understanding to live - and what value you place on human IQ!

If your company loses API access for a week, can you still do your job? If the model changes behavior, does your reasoning process break? If you need to defend a decision under hostile questioning, can you reconstruct your logic without scrolling through chat history?

Red Rails is a preparedness doctrine. It assumes there will be contexts (crises, adversarial settings, institutional failures) where you need to think clearly without assistance. It treats biological cognition as a strategic reserve worth maintaining.

The Mechanism: Graph-First Reasoning (Your Cognitive Sovereignty Toolkit)

I’m working on a protocol, a kind of mini ‘language of thought’, built on these core operational principles:

Build your reasoning as a graph, not as prose.

Instead of writing paragraphs (which AI easily completes for you), you construct an explicit relational map:

Nodes: Claims, Evidence, Mechanisms, Variables, Decisions, Actions, Goals

Edges: supports, contradicts, causes, implies, depends-on, measures

The graph is canonical - it’s the real structure of your reasoning. Prose, slides, equations are just renderings of that graph.
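The grammar above maps naturally onto a small data structure. This is a minimal sketch, assuming Python 3.9+ and only the standard library; the class and method names (`ReasoningGraph`, `add_node`, `add_edge`, `render_text`) and the example content are hypothetical. It shows two ideas from the protocol: node and edge types come from a fixed vocabulary, and text is rendered from the graph rather than the other way round.

```python
from dataclasses import dataclass, field

# Fixed vocabulary taken from the node and edge lists above.
NODE_TYPES = {"claim", "evidence", "mechanism", "variable", "decision", "action", "goal"}
EDGE_TYPES = {"supports", "contradicts", "causes", "implies", "depends-on", "measures"}


@dataclass
class Node:
    kind: str
    text: str
    tags: set[str] = field(default_factory=set)


@dataclass
class ReasoningGraph:
    nodes: dict[str, Node] = field(default_factory=dict)
    edges: list[tuple[str, str, str]] = field(default_factory=list)  # (source, relation, target)

    def add_node(self, node_id: str, kind: str, text: str, *tags: str) -> None:
        if kind not in NODE_TYPES:
            raise ValueError(f"unknown node type: {kind}")
        self.nodes[node_id] = Node(kind, text, set(tags))

    def add_edge(self, source: str, relation: str, target: str) -> None:
        if relation not in EDGE_TYPES:
            raise ValueError(f"unknown edge type: {relation}")
        if source not in self.nodes or target not in self.nodes:
            raise KeyError("both endpoints must exist before they can be connected")
        self.edges.append((source, relation, target))

    def render_text(self) -> str:
        """One possible rendering: each edge as a line of text. The graph stays canonical."""
        return "\n".join(
            f"{self.nodes[s].text} --{rel}--> {self.nodes[t].text}" for s, rel, t in self.edges
        )


g = ReasoningGraph()
g.add_node("c1", "claim", "Remote onboarding slows ramp-up")
g.add_node("e1", "evidence", "Q3 new-hire survey")
g.add_edge("e1", "supports", "c1")
print(g.render_text())  # Q3 new-hire survey --supports--> Remote onboarding slows ramp-up
```

Rejecting unknown types is the point of the closed vocabulary: you have to decide what kind of thing each statement is before you can record it.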

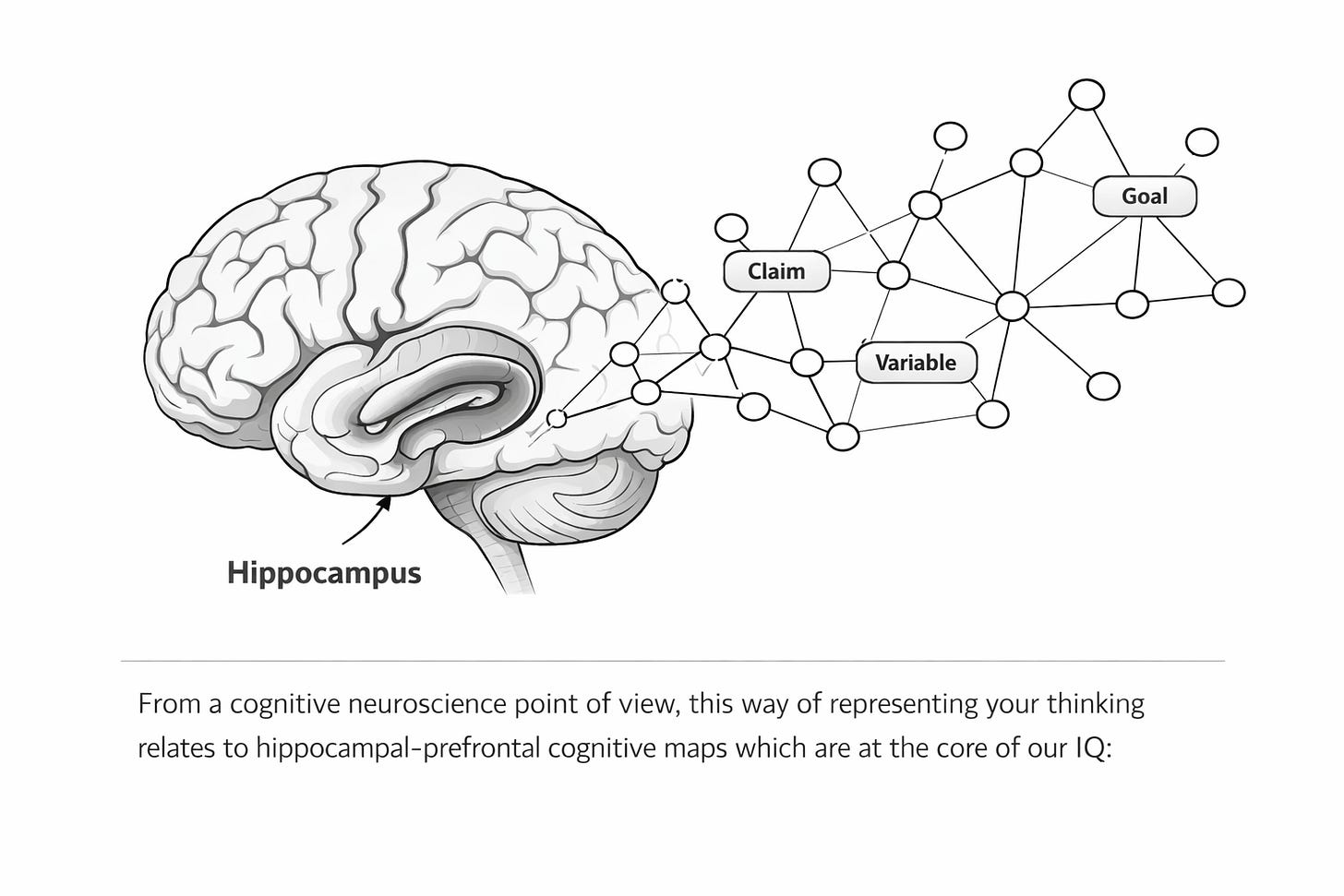

From a cognitive neuroscience point of view, this way of representing your thinking relates to hippocampal-prefrontal cognitive maps, which are at the core of our IQ.

AI is optimised to predict plausible continuations from patterns in data. That makes it brilliant at fluent prose and surface-level coherence. But it is not inherently built to maintain a stable, inspectable world-model with explicit variables, causal commitments, and update rules that you can audit end-to-end.

That’s why graph-first reasoning is protective: you’re forcing the work into a format where correlations and “sounds right” completion are not enough. The model can suggest candidates, but you have to choose the structure and own the commitments.

Why This Preserves Cognitive Sovereignty

Illegible to autocomplete: AI can’t “finish your thought” in graph form the way it completes sentences. You have to decide what connects to what in an underlying logic.

Explicit commitments: Every claim needs evidence or an assumption tag. Every mechanism needs a measurable variable. No handwaving allowed.

Portable and inspectable: You can hand someone your graph and they can see your entire reasoning structure - not just the polished output.

Traceable updates: When evidence changes, you know exactly which nodes to update. Your beliefs have version control.
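Traceable updates can be sketched as a graph walk. Assuming edges are stored as `(source, relation, target)` triples, the hypothetical `affected_by` function below collects every node downstream of a changed node. The direction convention (a change in the source of `supports`, `causes`, and similar edges can affect the target, while `A depends-on B` propagates from B to A) is an assumption of this sketch, not part of the protocol as described.

```python
from collections import deque

# Assumed convention: for these relations, a change in the source can affect the target.
FORWARD = {"supports", "contradicts", "causes", "implies", "measures"}
# "A depends-on B" points the other way: a change in B can affect A.
BACKWARD = {"depends-on"}


def affected_by(changed: str, edges: list[tuple[str, str, str]]) -> set[str]:
    """Return every node whose standing may need revisiting when `changed` is updated."""
    downstream: dict[str, list[str]] = {}
    for source, relation, target in edges:
        if relation in FORWARD:
            downstream.setdefault(source, []).append(target)
        elif relation in BACKWARD:
            downstream.setdefault(target, []).append(source)

    seen: set[str] = set()
    queue = deque([changed])
    while queue:  # breadth-first walk; `seen` also guards against cycles
        node = queue.popleft()
        for nxt in downstream.get(node, []):
            if nxt != changed and nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


edges = [
    ("survey", "supports", "slow_rampup"),
    ("slow_rampup", "implies", "add_buddy_program"),
    ("budget_plan", "depends-on", "add_buddy_program"),
    ("old_report", "supports", "unrelated_claim"),
]
print(sorted(affected_by("survey", edges)))
# ['add_buddy_program', 'budget_plan', 'slow_rampup']
```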

The Six ‘Lenses’ (Different Views of the Same Graph)

The same graph renders differently depending on your cognitive goal:

Lens A—BELIEFS/KNOWLEDGE: “What do I think is true?”

Build models with explicit assumptions and falsifiers. Every claim needs evidence or gets tagged “assumption.” Every mechanism touches a measurable variable.
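Lens A’s two rules are mechanical enough to check automatically. A minimal sketch, assuming nodes are stored as `id -> (kind, tags)` and that a mechanism “touches” a variable through a `measures` edge in either direction; `lens_a_violations` is a hypothetical helper. It only flags gaps; deciding how to fill them stays with you.

```python
def lens_a_violations(nodes: dict[str, tuple[str, set[str]]],
                      edges: list[tuple[str, str, str]]) -> list[str]:
    """nodes maps id -> (kind, tags); edges are (source, relation, target) triples."""
    problems = []
    for node_id, (kind, tags) in nodes.items():
        if kind == "claim" and "assumption" not in tags:
            # A claim needs at least one evidence node pointing at it with "supports".
            has_evidence = any(
                rel == "supports" and tgt == node_id and nodes[src][0] == "evidence"
                for src, rel, tgt in edges
            )
            if not has_evidence:
                problems.append(f"claim '{node_id}': no evidence and not tagged 'assumption'")
        if kind == "mechanism":
            # A mechanism needs a "measures" edge to a variable node (either direction).
            touches_variable = any(
                rel == "measures"
                and node_id in (src, tgt)
                and nodes[tgt if src == node_id else src][0] == "variable"
                for src, rel, tgt in edges
            )
            if not touches_variable:
                problems.append(f"mechanism '{node_id}': no measurable variable attached")
    return problems


nodes = {
    "c1": ("claim", set()),
    "c2": ("claim", {"assumption"}),
    "c3": ("claim", set()),
    "e1": ("evidence", set()),
    "m1": ("mechanism", set()),
    "v1": ("variable", set()),
    "m2": ("mechanism", set()),
}
edges = [("e1", "supports", "c1"), ("v1", "measures", "m1")]
for problem in lens_a_violations(nodes, edges):
    print(problem)
# claim 'c3': no evidence and not tagged 'assumption'
# mechanism 'm2': no measurable variable attached
```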

Lens B—ARGUMENT: “Can my reasoning survive adversarial pressure?”

Map the strongest objections, not strawmen. Force discriminator variables: what observation would shift confidence?
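A discriminator variable can be found by comparing what each position predicts. This sketch assumes you write down, for your claim and for the strongest objection, the outcome each expects on a set of observations; the hypothetical `discriminators` function then returns the observations where they disagree. Only those can shift confidence between the two, so they are the ones worth checking. The example content is made up.

```python
def discriminators(predictions: dict[str, dict[str, str]]) -> list[str]:
    """predictions[position][observation] = the outcome that position expects.

    Returns observations on which at least two positions expect different outcomes,
    i.e. observations that would shift confidence between them.
    """
    observations = sorted({obs for expected in predictions.values() for obs in expected})
    result = []
    for obs in observations:
        outcomes = {expected[obs] for expected in predictions.values() if obs in expected}
        if len(outcomes) > 1:
            result.append(obs)
    return result


predictions = {
    "my claim: onboarding is the bottleneck": {
        "ramp-up time after buddy program": "falls",
        "first-year attrition": "unchanged",
    },
    "strongest objection: hiring quality is the bottleneck": {
        "ramp-up time after buddy program": "unchanged",
        "first-year attrition": "unchanged",
    },
}
print(discriminators(predictions))  # ['ramp-up time after buddy program']
```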

Lens C—DECISION: “What should I do, given uncertainty?”

Separate predictions from values. Name what you don’t know. Identify what information is worth gathering before committing.
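One standard way to keep predictions and values apart is textbook expected-value reasoning, swapped in here as an illustration rather than taken from the protocol. Probabilities (what you predict) and utilities (what you care about) live in separate tables, and the expected value of perfect information gives an upper bound on what it is worth spending to resolve the uncertainty before committing. The function names, states, and numbers are hypothetical.

```python
def expected_value(action: str, probs: dict[str, float],
                   utility: dict[tuple[str, str], float]) -> float:
    # Predictions (probs) and values (utility) stay in separate tables.
    return sum(p * utility[(action, state)] for state, p in probs.items())


def best_action(actions: list[str], probs: dict[str, float],
                utility: dict[tuple[str, str], float]) -> str:
    return max(actions, key=lambda a: expected_value(a, probs, utility))


def value_of_perfect_information(actions: list[str], probs: dict[str, float],
                                 utility: dict[tuple[str, str], float]) -> float:
    """Upper bound on what it is worth paying to learn the true state before committing."""
    # If you knew the state, you would pick the best action for that state.
    with_info = sum(p * max(utility[(a, s)] for a in actions) for s, p in probs.items())
    # Without that knowledge, you commit to the single best action on average.
    without_info = max(expected_value(a, probs, utility) for a in actions)
    return with_info - without_info


actions = ["launch", "wait"]
probs = {"demand high": 0.6, "demand low": 0.4}  # predictions: what you think will happen
utility = {                                      # values: how much each outcome matters to you
    ("launch", "demand high"): 100, ("launch", "demand low"): -50,
    ("wait", "demand high"): 20, ("wait", "demand low"): 10,
}
print(best_action(actions, probs, utility))                             # launch
print(round(value_of_perfect_information(actions, probs, utility), 2))  # 24.0
```

Here, knowing demand in advance would be worth at most 24 utility units; if a market test would cost more than that, committing now is the better call.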

Lens D—PLANNING: “How do I get from here to there?”

Map preconditions, dependencies, and branch points. Add monitoring signals and stop rules so you can adapt instead of perseverating.
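Preconditions and stop rules fit a dependency graph walked in order. This sketch uses Python’s standard-library `graphlib.TopologicalSorter` (Python 3.9+) to order the steps; the `run_plan` helper, the step names, and the 0.7 satisfaction threshold are hypothetical. For simplicity it evaluates each stop rule against a fixed set of monitoring signals, whereas a real plan would refresh the signals as steps complete.

```python
from graphlib import TopologicalSorter
from typing import Callable


def run_plan(preconditions: dict[str, set[str]],
             stop_rules: dict[str, Callable[[dict], bool]],
             signals: dict) -> tuple[list[str], str]:
    """Walk steps in dependency order; halt before any step whose stop rule fires."""
    completed: list[str] = []
    # static_order() raises graphlib.CycleError if the preconditions are circular.
    for step in TopologicalSorter(preconditions).static_order():
        rule = stop_rules.get(step)
        if rule is not None and rule(signals):
            return completed, f"stopped before '{step}'"
        completed.append(step)
    return completed, "finished"


preconditions = {  # step -> steps that must be done first
    "recruit testers": {"draft survey"},
    "pilot": {"recruit testers"},
    "full rollout": {"pilot"},
}
stop_rules = {"full rollout": lambda s: s["pilot_satisfaction"] < 0.7}
print(run_plan(preconditions, stop_rules, {"pilot_satisfaction": 0.55}))
# (['draft survey', 'recruit testers', 'pilot'], "stopped before 'full rollout'")
```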

Lens E (mini) —PROCESS: “How do I make this repeatable?”

Define steps, owners, quality gates, and handoffs. Make implicit knowledge explicit in a recipe.
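A repeatable process can be written down as an ordered recipe in which each step has an owner and a quality gate. In this minimal sketch the `Step` dataclass, the `run_process` helper, and the names are hypothetical, and the work inside each step is left out: it only models whether the artifact passes each gate and who it is handed to next.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    name: str
    owner: str
    gate: Callable[[dict], bool]  # quality gate the artifact must pass before handoff


def run_process(steps: list[Step], artifact: dict) -> list[str]:
    """Check each quality gate in order and record handoffs; stop at the first failed gate."""
    log: list[str] = []
    for i, step in enumerate(steps):
        if not step.gate(artifact):
            log.append(f"{step.name}: gate failed, stays with {step.owner}")
            return log
        receiver = steps[i + 1].owner if i + 1 < len(steps) else "done"
        log.append(f"{step.name}: gate passed, {step.owner} -> {receiver}")
    return log


recipe = [
    Step("draft", "Ana", lambda a: "draft" in a),
    Step("review", "Ben", lambda a: a.get("reviewed", False)),
    Step("publish", "Cy", lambda a: a.get("approved", False)),
]
for line in run_process(recipe, {"draft": "v1"}):
    print(line)
# draft: gate passed, Ana -> Ben
# review: gate failed, stays with Ben
```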

Lens F (mini) —MEASUREMENT: “What would update my beliefs?”

Specify discriminators and validity threats. Convert “I believe X” into “If X, then we’d see Y under conditions Z.”
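The conversion from belief to testable prediction is essentially a template. This sketch, with a hypothetical `Prediction` dataclass and made-up example content, fills in X, Y, and Z and keeps validity threats alongside them. Because the observation and conditions are required fields, a belief cannot be recorded without saying what would count as evidence for it.

```python
from dataclasses import dataclass, field


@dataclass
class Prediction:
    belief: str        # X: what you think is true
    observation: str   # Y: what you would expect to see if X holds
    conditions: str    # Z: the conditions under which Y should appear
    validity_threats: list[str] = field(default_factory=list)

    def render(self) -> str:
        text = f"If {self.belief}, then we'd see {self.observation} under conditions {self.conditions}."
        if self.validity_threats:
            text += " Validity threats: " + "; ".join(self.validity_threats) + "."
        return text


p = Prediction(
    belief="the buddy program speeds up onboarding",
    observation="ramp-up time fall by at least 20%",
    conditions="where hiring cohorts are comparable",
    validity_threats=["seasonal workload differences", "manager turnover"],
)
print(p.render())
```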

The H-AGI pattern: You think through the graph using different lenses depending on the task. The AI can then propose edits (“add this evidence node,” “here’s a competing mechanism,” “what about this failure mode?”), but it never gets to build the core structure. That stays yours, in your own head!
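The H-AGI pattern amounts to a review gate between the AI and your graph. In this minimal sketch the `Proposal` dataclass and `review` function are hypothetical, proposals are limited to edge additions for brevity, and the `approve` callback stands in for your own judgment: nothing the AI suggests reaches the graph unless you accept it.

```python
from dataclasses import dataclass
from typing import Callable

Edge = tuple[str, str, str]  # (source, relation, target)


@dataclass
class Proposal:
    edge: Edge       # the graph edit the AI suggests (edge additions only, for brevity)
    rationale: str   # e.g. "add this evidence node"


def review(edges: list[Edge], proposals: list[Proposal],
           approve: Callable[[Proposal], bool]) -> tuple[list[Edge], list[Proposal]]:
    """Apply only owner-approved proposals; the AI never writes to the graph directly."""
    accepted = list(edges)  # copy, so the original graph is untouched until you commit
    rejected = []
    for proposal in proposals:
        if approve(proposal):
            accepted.append(proposal.edge)
        else:
            rejected.append(proposal)
    return accepted, rejected


graph = [("survey", "supports", "slow_rampup")]
suggestions = [
    Proposal(("exit_interviews", "supports", "slow_rampup"), "add this evidence node"),
    Proposal(("hiring_quality", "causes", "slow_rampup"), "here's a competing mechanism"),
]
checked_myself = {"hiring_quality"}  # stand-in for your own judgment
new_graph, rejected = review(graph, suggestions, lambda p: p.edge[0] in checked_myself)
print(new_graph)
# [('survey', 'supports', 'slow_rampup'), ('hiring_quality', 'causes', 'slow_rampup')]
print([p.rationale for p in rejected])
# ['add this evidence node']
```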

The Operator: Red Rails Protocol

Here’s what changes in practice:

1. The Rail Switch Test (10 seconds)

Before using AI, ask:

Is this personally important for my own IQ: my reasoning, understanding, and learning?

Does it affect money, health, reputation, or relationships?

Is it hard to reverse?

Am I tempted to outsource the thinking (not just the typing)?

If YES to any → Probably go Red Rails.
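The Rail Switch Test reduces to an OR over four questions. This sketch encodes it with hypothetical question keys and also returns which questions triggered, since knowing why you switched rails is part of the habit. As in the text, the result is a suggestion (“probably go Red Rails”), not a rule.

```python
QUESTIONS = {
    "own_iq": "Is this personally important for my own reasoning, understanding, and learning?",
    "stakes": "Does it affect money, health, reputation, or relationships?",
    "irreversible": "Is it hard to reverse?",
    "outsourcing": "Am I tempted to outsource the thinking (not just the typing)?",
}


def rail_switch(answers: dict[str, bool]) -> tuple[str, list[str]]:
    """Suggest a rail: any YES points to Red; unanswered questions count as NO."""
    triggered = [key for key in QUESTIONS if answers.get(key, False)]
    return ("red" if triggered else "blue"), triggered


print(rail_switch({"stakes": True, "irreversible": True}))  # ('red', ['stakes', 'irreversible'])
print(rail_switch({}))                                       # ('blue', [])
```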

2. Constrained AI Roles in Red Rails

When in Red Rails, AI is never a co-pilot. It can only play these five roles:

Librarian: Find evidence candidates (you verify them)

Adversary: Generate the strongest objection (you evaluate it)

Discriminator: Propose what would decide between competing ideas (you choose the test)

Translator: Render your graph into prose/slides/code (you own the structure; the AI only ‘projects’ it into a particular medium)

Critic: Point out contradictions, missing assumptions, weak links (you decide if valid)

The forcing function: AI proposes graph edits only, never conclusions.
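The role constraints can be expressed as a whitelist. In this sketch the output-kind names (`propose_evidence`, `flag_weak_link`, and so on) are hypothetical labels for the five roles above. Any role outside the list, and any output such as a `conclusion` that no role is allowed to produce, is refused.

```python
# Hypothetical output kinds each Red Rails role may produce. Nothing maps to "conclusion".
ALLOWED_OUTPUTS = {
    "librarian": {"propose_evidence"},
    "adversary": {"propose_objection"},
    "discriminator": {"propose_test"},
    "translator": {"render"},  # renders your graph; does not change it
    "critic": {"flag_contradiction", "flag_missing_assumption", "flag_weak_link"},
}


def admit(role: str, output_kind: str) -> bool:
    """Accept AI output only from one of the five roles, and only of a kind that role may produce."""
    return output_kind in ALLOWED_OUTPUTS.get(role, set())


print(admit("critic", "flag_weak_link"))      # True
print(admit("critic", "conclusion"))          # False
print(admit("co-pilot", "propose_evidence"))  # False: not a Red Rails role
```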

Recovery Box: When You’ve Already Drifted Blue

Common slip: You’ve been using AI as a ‘cognitive co-pilot’ for months. You just realised you’re less capable solo than you used to be.

Reset sequence (no self-blame):

Audit one important belief or decision. Ask: “Can I reconstruct the reasoning from scratch without AI?”

If no, rebuild it in Red Rails. Take 15 minutes. Make the graph explicit. Notice where your reasoning was fuzzy.

Pick one high-stakes domain for Red Rails discipline. Not everything - just your critical thinking zones (strategy, career decisions, learning new fields).

Run a monthly “no-AI sprint” (3-5 days). Build something meaningful without assistance. Treat it as capability maintenance, like a pilot doing manual landings.

Use Blue Rails everywhere else. This isn’t about purity. It’s about keeping a strategic reserve functional.

The key insight: Skill atrophy is reversible if caught early. The graph discipline gives you a clear signal when drift is happening. Run both rails, and protect your own IQ.

The IQ (Red Rail) Transfer Target

This shift should show up in three ways:

1. In Real-Time Decisions

You can explain your reasoning without referring to chat history. Your logic is in your head (or on a page you drew), not buried in a conversation transcript.

2. Under Pressure

When someone challenges you, you don’t feel the urge to “check with AI first.” You have structural confidence - not because you’re always right, but because you know how your reasoning is built.

3. In Skill Trajectory: Augmenting IQ

After 3-6 months, you’re faster at building reasoning from scratch, not slower. The graph discipline becomes automatic. You’re using AI more than before, but in ways that strengthen rather than replace your own cognition.

So with practice, you can use AI extensively on the Red Rail (for research, critique, translation, stress-testing) without becoming dependent on it for the load-bearing structure of your thought.

That’s cognitive sovereignty. Not avoiding AI. Not using it naively. Using it intensively while keeping the core of your reasoning in a format you fully own.

And you can still switch to Blue Rails productivity and ‘hybrid intelligence’ when you need to.

What’s Next?

I’m running a 4-week bootcamp starting in late January / early February. It includes an H-AGI module (Red/Blue Rails AI use) with live webinars, hands-on graph-building practice, and weekly Red Rails practice challenges across all six lenses.

This isn’t about learning “AI safety tips.” It’s about building a language of thought that makes your reasoning illegible to autocomplete and portable across tools.

You’ll leave the bootcamp with:

A working graph grammar you can use daily

Trained instincts for the Rail Switch Test

Practical protocols for high-stakes decisions, argument mapping, strategic planning, and measurement design

Optionally, interaction with a small community of people committed to using AI without losing cognitive independence. We’ll have this on Discord.

The bootcamp is for you if:

You use AI daily but occasionally feel uneasy about how much you rely on it

You’re in a leadership role where reasoning quality matters as much as or more than speed

You’re building something important and can’t afford to outsource the core thinking

You want to learn new domains well, not just consume summaries

More generally, you don’t want to see your IQ degrade over the coming years through over-reliance on AI.

More details and registration link coming soon!

For now: try the Rail Switch Test this week. Notice when you’re tempted to hand over not just typing, but thinking. See how it feels to map your reasoning explicitly before asking AI to help.

You might be surprised how much you’ve already drifted - and how quickly you can correct course once you have a map.

The geometry is simple: AI can be a powerful tool or a slow-motion substitution. The difference is whether you build the structure yourself, or let it emerge from collaboration you can’t reconstruct.