Introducing Hybrid Human-Artificial General Intelligence (H-AGI): Part 1

Don’t Let AI Flatten Your Thinking — Hybridise It!

Abstract

This article introduces Human–AI General Intelligence (H-AGI): a human-led way of thinking with AI that builds intelligence rather than blunting it. Part 1 makes the case: passive AI use can erode active thinking, and today’s models excel at patterns but struggle with causal understanding. We then outline a field-ready, 4-beat rhythm (Diverge → Compress → Stress-test → Reframe), derived from my G-Loop, with a clear split of roles: humans lead abduction and deduction; AI handles induction and tooling (search, compression, quick simulations). We also add guardrails, such as preserving agency and balancing creation with control. Part 2 applies the loop to a live problem: boosting the impact of high-quality articles.

Taxonomy

HGI — Human General Intelligence (baseline human agent).

H-AGI — Human–AI General Intelligence (hybrid, human-led; this work).

AGI — Artificial General Intelligence (machine-only generality).

The rationale for developing the idea of H-AGI is based largely on two recent articles in the AI space:

Article 1: Active Thinking vs Vegetation

What really happens to your brain when ChatGPT helps you write essays? A new MIT study looked inside our heads (literally), and the results matter for anyone concerned with maintaining and improving general intelligence.

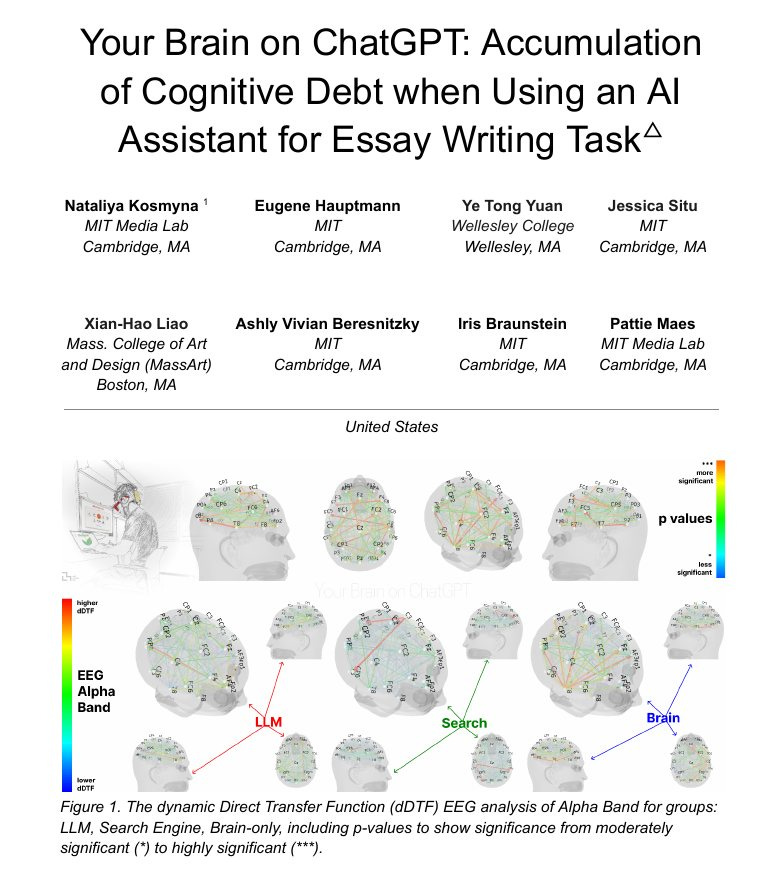

Participants wrote essays in three ways: (1) independently (‘Brain’ group), (2) with a search engine (‘Search’ group), or (3) with ChatGPT (‘LLM’ group). After a few sessions, they swapped methods: AI users had to go tool-free, and independent writers got a taste of AI.

The findings? EEG recordings showed the most brain activity and the strongest connectivity when people wrote unaided (see figure above). Search engine users came second. But AI users? Their brain activity was lowest, especially in areas linked to focus, deep thinking, and creativity.

Even more interesting, when former AI users had to write without help, their brain activity dropped further, as if their minds had adjusted to doing less work. Meanwhile, independent writers who switched to AI kept high engagement, possibly because they were actively directing the use of the AI, rather than passively offloading their thinking.

There were other warning signs. AI users felt less ownership of their essays, struggled to recall what they had written, and produced work rated weaker by both human judges and automated scoring.

The conclusion from this study is that while AI can speed things up, over-reliance on it might chip away at your thinking and writing skills. This study isn’t the final word, of course, and more research is needed. But it’s a useful reminder: AI should be a thinking partner, not a brain replacement!

Article 2: Causality & Logic vs Surface Prediction

Can today’s best generative AI models actually learn how the world works — or are they just superb mimics of surface patterns? A new paper by Keyon Vafa and colleagues says the latter.

Title: What has a foundation model found? — Vafa et al. (arXiv)

Foundation models (e.g., ChatGPT, Gemini) are pre-trained on massive datasets and then fine-tuned for specific tasks. The open question is whether they move beyond correlations to build genuine ‘world models’ — compact, causal rules that explain and predict.

Vafa’s test is elegant. Give a model a tiny ‘toy world’ (think: a simplified solar system), fine-tune it, and ask it to do two things: (1) predict the next datapoint and (2) state the underlying rule that generates the data (e.g., the gravity law). Result? The model nails prediction, but then proposes a bizarre, data-specific heuristic rather than the simple causal rule you and I would write down. It imitates the curve; it doesn’t discover the cause.

Where it struggles

Deduction: What looks like logical reasoning is often high-dimensional pattern recall, not the manipulation of rules (such as applying ‘All A are B…’ as a schema).

Abduction: It lacks inference-to-the-best-explanation — the creative leap to a concise causal hypothesis or mechanism.

Why that matters: Science (and everyday problem-solving) runs a hypothetico-deductive loop: abduce a hypothesis → deduce predictions → test. Without real abduction and deduction, the loop never fully closes, so generalisation breaks when conditions shift.

A quick mental picture: one map shows every pothole (data patterns); another shows the road network (causal structure). When the bridge is closed, only the second helps you re-route.
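To make the abduce → deduce → test loop concrete, here is a minimal Python sketch on a toy solar system in the spirit of Vafa’s test. It is purely illustrative and not the paper’s method. Assumptions: the orbital figures are rounded standard values for semi-major axis (AU) and period (years); the ‘abduced’ hypothesis is Kepler’s third law (T² = a³ in these units); `fit_line` is a hypothetical stand-in for a surface-pattern heuristic fitted only to Earth and Mars; and the 1% tolerance is an arbitrary choice.

```python
# Toy illustration of abduce -> deduce -> test (hypothetical sketch, not Vafa et al.'s method).
# Data: rounded semi-major axis a (AU) and orbital period T (years).
ORBITS = {
    "Mercury": (0.387, 0.241),
    "Earth": (1.000, 1.000),
    "Mars": (1.524, 1.881),
    "Jupiter": (5.203, 11.862),
}


def hypothesis_period(a):
    """Abduced law: Kepler's third law, T^2 = a^3 (AU, years), so T = a ** 1.5."""
    return a ** 1.5


def fit_line(p, q):
    """Hypothetical surface heuristic: a straight line through two observations."""
    (a1, t1), (a2, t2) = p, q
    slope = (t2 - t1) / (a2 - a1)
    return lambda a: t1 + slope * (a - a1)


def deduce_and_test(orbits, predict, tolerance=0.01):
    """Deduce a period for each body and test it against the observed value.

    Returns {name: (predicted, observed, passed)}; passed means the
    relative error is within `tolerance`.
    """
    results = {}
    for name, (a, observed) in orbits.items():
        predicted = predict(a)
        passed = abs(predicted - observed) / observed <= tolerance
        results[name] = (predicted, observed, passed)
    return results


if __name__ == "__main__":
    heuristic = fit_line(ORBITS["Earth"], ORBITS["Mars"])  # fitted on two bodies only
    for label, rule in [("Kepler's law", hypothesis_period), ("Line fit", heuristic)]:
        print(label)
        for name, (pred, obs, ok) in deduce_and_test(ORBITS, rule).items():
            print(f"  {name:8s} predicted {pred:7.3f}, observed {obs:7.3f}: {'pass' if ok else 'FAIL'}")
```

The line fit passes on Earth and Mars, the data it was built from, but fails on Mercury and Jupiter; Kepler’s law passes on all four. In the terms of the picture above, the line maps the potholes, while the law maps the road network.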

Takeaway: Foundation models are astonishing pattern engines. Treat them as such. Use them to compress and search, but keep humans in the driver’s seat for causal modelling, hypothesis generation, and testing. That’s where genuine understanding still lives.

Human–AI General Intelligence (H-AGI)

If Article 1 warned against vegetating with AI, and Article 2 showed why current models plateau at surface prediction, this section offers the antidote: a simple, repeatable strategy for thinking with AI that makes you smarter over time.

We can think of this as human–AI hybrid intelligence, but one that is human-led and promotes self-efficacy: the sense of agency and effectiveness that is essential for higher intelligence.

Human vs AI: who does what (and why)

Human leads abduction & deduction.

Abduction = the leap to the best explanation (your “why” guess).

Deduction = what must follow if that guess is true (predictions and checks).

That’s where real understanding and judgement live.

AI does induction & tooling.

Induction = pattern-finding from many examples.

Tooling = search, compression, retrieval, quick simulations.

Think of AI as a critic, librarian, and toolsmith, not the theorist or decider.

This split is the core of H-AGI: a human-led hybrid where your mind stays in charge and the model extends your reach.

The H-AGI loop

The Loop involves:

Diverge → Compress → Stress-test → Reframe

We’ll apply this H-AGI loop to a live question:

“Why aren’t my articles about human–artificial general intelligence (H-AGI) having the impact they need to have?” 😀

In Part 2, instead of pasting a vague prompt into a bot and passively following whatever comes back, we’ll run the full 4-beat H-AGI loop on the “high-quality but low-impact content” problem. The aim is to keep your agency, build a causal why-story, and use AI strictly where it is strong, tapping the complementary strengths of human general intelligence (HGI) and artificial general intelligence (AGI).

Human Embodiment

We will also show how H-AGI rests on three principles, embodied in our biology and culture, that AI can never be part of:

Ensuring a balance between challenge and what we already know well, so that the process stays fun and motivating. When an activity is fun (often when we are in a ‘flow state’), that is a clear signal we are neither over-reaching nor staying too long in our comfort zone.

Ensuring a balance between control and creativity, and between explanation and possibility. Unlike AI, which aims to minimise uncertainty and maximise control, we thrive in our ever-evolving lives on some uncertainty and passive spontaneity, both of which creativity needs.

Ensuring a balance (1) between an ‘objective’ outlook concerned with reality, truth, and performance, and a subjective outlook concerned with personal experience, feelings, and meaning; and (2) between a self-interested, autonomous, and authentic outlook, and an interpersonal, norm-based outlook that prioritises how others see and value us.

Comments

Let us know your thoughts on the concept of H-AGI in the comments below. Debates tend to compare and contrast human general intelligence with artificial general intelligence (AGI), but there is little discussion of the potential for hybrid intelligence.
